In the Forex market, trading Binary Options is a golden opportunity to earn money. Nowadays, especially after 2020, the value of virtual currencies, commodities, indexes, and others has increased rapidly. That’s the reason why everyone is looking for a way to invest in binary options.

However, binary options trading has one limitation, which experts treat as an opportunity rather than a drawback: some market hours are better for trading than others.

In simple words, if you want to make a high profit from binary options trading, you have to focus on these special hours. This article explains why trading during these hours matters, what those hours are, and how to trade efficiently during them.

Further information:

- https://www.timeanddate.com/time/map/

- https://www.investopedia.com/terms/o/openoutcry.asp

- https://www.cmegroup.com/education/courses/introduction-to-futures/trading-venues-pit-vs-online.html

- https://www.newbie-trader.com/pit-session-times/

(Risk warning: Your capital can be at risk)

Why are Binary Options market hours important to traders?

It is easier to understand if you take the stock exchange as an example. The fundamental difference between the stock exchange and binary options trading is time: a stock exchange is open only for a limited period each day, whereas binary options trading is available 24/7.

Given this difference, here are two reasons why market hours matter for binary options.

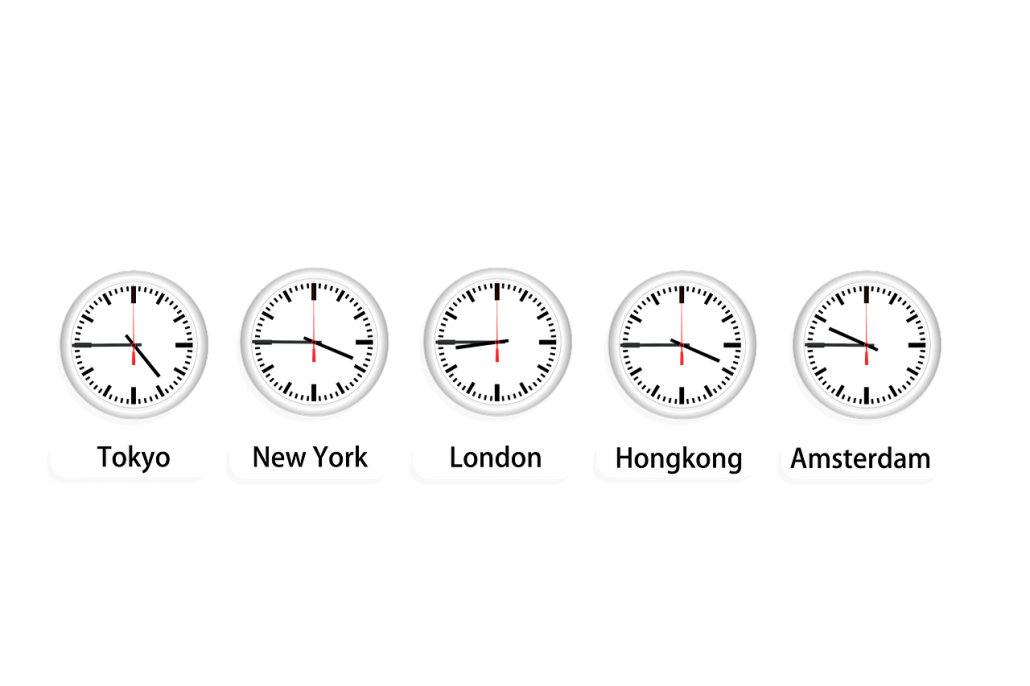

#1 Different time zones

Time zones always matter in trading. The three most active stock exchanges, the American, the Japanese, and the British, each follow their own local timetable.

Binary options are tied to assets traded on these exchanges, yet binary trading itself can be done at any time. Because the market is global, the active hours depend on the asset you are trading.

Now, suppose you are awake in America at a time when the Japanese stock exchange is in session. There is no point in trying to trade American exchange assets at that hour. So, no matter which country you are from, stick to the active hours of the market you trade.

#2 Volume of the traders and trades

Several factors make the market volatile. Volatility does not only mean a sudden fall in price; it also covers a sudden rise in the price of stocks or currencies. As a trader, you should focus on the periods when the market is moving.

As mentioned earlier, the three major stock exchanges operate in different time zones, so their sessions naturally overlap. Professional traders do not limit themselves to a single exchange.

So what happens when fewer traders are active, outside those overlapping hours? The trading volume for that asset drops too, and the price is less likely to move. That’s why knowing the right time for binary trading is important: the higher the volume of traders, the higher the volatility.

For these two reasons, you need to know the best times for binary options trading. They are listed below.

When is the best time to trade Binary Options?

First things first. The best binary trading time in India differs from the best time to trade binary options in the US. Traders who ask ‘What is the best time to trade binary options in India?’ will therefore get a different answer.

You may be wondering how anyone can name a single best time without knowing each trader’s preferences. After all, some traders handle high-volatility, high-volume markets well, whereas others perform better in low-volatility, low-volume markets.

The timetables below take both strategies into account. During the night in an exchange’s home country, volume stays low; during the day, it is considerably higher. Let’s see when to trade binary options.

Best hours for currencies:

| Region | Starting Time | Ending Time |
|---|---|---|
| New York (USD) | 8.00 EST | 17.00 EST |
| London (GBP) | 3.00 EST | 12.00 EST |
| Tokyo (JPY) | 19.00 EST | 4.00 EST |
| Europe (EUR) | 2.00 EST | 11.00 EST |

Trading between 5 am and 12 pm (GMT)

- EUR/JPY

- GBP/USD

- USD/CAD

- NZD/USD

- USD/JPY

Trading between 12 pm and 7 pm (GMT)

- EUR/USD

- AUD/USD

- NZD/USD

- USD/CHF

- USD/CAD

- GBP/USD

- GBP/JPY

- EUR/GBP

Trading between 7 pm and 5 am (GMT)

- USD/CHF

- USD/CAD

- GBP/USD

- EUR/JPY

- NZD/USD

Of these windows, the one between 12 pm and 7 pm GMT sees the most forex trades.

Best hours for commodities:

| Commodity | Starting Time | Ending Time |
|---|---|---|
| Corn | 9.30 EST | 13.15 EST |
| Crude Oil | 9.00 EST | 14.30 EST |
| Silver | 8.25 EST | 17.15 EST |
| Gold | 8.20 EST | 17.15 EST |
| Natural Gas | 9.30 EST | 17.15 EST |

Best hours for stocks and indices:

| Stocks & indices | Starting Time | Ending Time |
|---|---|---|
| USA | 9.30 EST | 16.30 EST |
| Europe | 2.00 EST | 11.00 EST |

You can find the overlapping active periods on the chart, which makes it easy to spot closing gaps, runaway gaps, and breakaway gaps. Professionals recommend these periods based on thorough research.

Many traders ask ‘What is the best time to trade binary options?’, ‘What is the best time to trade on Pocket Option?’ or ‘What is the best time to trade on Quotex?’. Let’s see how you can use the best times to trade binary options properly.

- For traders who want to trade binary options on American and British stock exchange assets, 8.00 to 12.00 EST is best. Both low-volume and high-volume strategies are applicable here.

- For traders focused on the British and Japanese exchanges, the best time frame to trade binary options is 3.00 to 4.00 EST. A lot of movement is recorded within this hour, so experts suggest being active then.

- If you live in any other country and want to trade binary options on these exchanges, you will probably have to be awake at night. First, decide which country’s market suits you. Then convert its timetable to your local time.

- Usually, the best time for binary trading in India is around 7.00–12.00 GMT and 18.00–0.00 GMT. Most traders are active then, giving you the chance to participate in a highly volatile market with plenty of opportunities to place your trades.
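The overlap windows in the list above can be cross-checked against the currency session table. The following is a minimal Python sketch, assuming the session hours from that table and whole-hour granularity; the names `SESSIONS_EST`, `open_hours`, and `overlap` are made up for illustration.

```python
# Session hours from the currency table above, as (start_hour, end_hour) in EST.
# An end hour smaller than the start hour means the session runs past midnight.
SESSIONS_EST = {
    "New York": (8, 17),
    "London": (3, 12),
    "Tokyo": (19, 4),
    "Europe": (2, 11),
}

def open_hours(start, end):
    """Return the set of whole clock hours (0-23) during which a session is open."""
    length = (end - start) % 24
    return {(start + i) % 24 for i in range(length)}

def overlap(a, b):
    """Return the sorted clock hours during which both sessions are open."""
    return sorted(open_hours(*SESSIONS_EST[a]) & open_hours(*SESSIONS_EST[b]))

print(overlap("New York", "London"))  # hours starting at 8, 9, 10, 11: 8.00 to 12.00 EST
print(overlap("London", "Tokyo"))     # hour starting at 3: 3.00 to 4.00 EST
```

Each number in the result is the start of a one-hour slot, so `[8, 9, 10, 11]` corresponds to the 8.00 to 12.00 EST window. The simple sort is enough here because neither overlap crosses midnight.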

These plans apply to binary options on stock exchange assets. Currency trading is different: although it is often grouped with stock exchange trading, currency binary trading is open 24×7.

Therefore, ‘What is the best time to trade on Pocket Option?’ has the same answer as ‘What is the best time to trade binary options in the USA?’: it all depends on your time zone and country of residence. Take a closer look at the comparison tables above or ask your broker’s support team about the most active binary options trading hours. These are usually the best time frames for binary options trading.
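Since the answer depends on your time zone, it can help to convert the quoted windows into local time. Below is a small Python sketch that converts the two India windows quoted above from GMT to India Standard Time. It assumes a fixed UTC+5:30 offset for India (which has no daylight saving); the helper name `to_local` and the example date are arbitrary choices for illustration.

```python
from datetime import datetime, timedelta, timezone

GMT = timezone.utc
# India Standard Time is a fixed UTC+5:30 offset with no daylight saving.
IST = timezone(timedelta(hours=5, minutes=30), "IST")

def to_local(hour_gmt, tz, day=datetime(2024, 1, 15)):
    """Convert a whole GMT hour on an arbitrary example day to HH:MM in tz."""
    moment = day.replace(hour=hour_gmt, tzinfo=GMT)
    return moment.astimezone(tz).strftime("%H:%M")

# The two India windows quoted above, as (start, end) GMT hours.
for start, end in [(7, 12), (18, 0)]:
    print(f"{start:02d}:00-{end:02d}:00 GMT = {to_local(start, IST)}-{to_local(end, IST)} IST")
```

For a country that observes daylight saving, a fixed offset would be wrong for part of the year, so a named time zone from the standard `zoneinfo` module would be the safer choice there.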

So if you commit to trading within these windows, your cryptocurrency and Bitcoin binary trading has a better chance of being profitable. The choice is yours. Let’s see how to use these times efficiently in trading.

How to use Binary trading times efficiently?

Now that binary trading times are clear, it’s time to put them into practice. Traders make mistakes, and beginners are especially vulnerable to mistakes that cost a lot of money. So, follow the instructions below for a better trading experience.

#1 Play efficiently and strategically

If you are on a trading platform, you probably have some strategies you follow, but are they effective for binary trading? Make sure of that first. If you can then stick to the strategy, you improve your chances of a profit.

Pick a time, preferably an overlapping period. For example, take the overlap between the British and American sessions. Then choose a specific window within that overlap.

Limit yourself to one hour, or two hours at most, and don’t exceed that limit. Research the market conditions in those countries and watch the pips. A move of at least 15 pips is enough. Some traders chase 30 pips, but that is often what leads to a loss.
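To make the pip targets concrete, here is a short Python sketch that measures a price move in pips. It assumes the common convention that one pip is 0.0001 for most major pairs and 0.01 for pairs quoted in JPY; the function name `pips_moved` and the example quotes are hypothetical.

```python
from decimal import Decimal

def pips_moved(pair, entry, exit_price):
    """Return the signed move between two quotes, in pips.

    Assumes the common convention: one pip is 0.01 for pairs quoted
    in JPY and 0.0001 for the other major pairs.
    """
    pip = Decimal("0.01") if pair.endswith("JPY") else Decimal("0.0001")
    return (Decimal(exit_price) - Decimal(entry)) / pip

TARGET_PIPS = 15  # the minimum move suggested above

# Hypothetical quotes, passed as strings to avoid float rounding.
move = pips_moved("GBP/USD", "1.2650", "1.2665")
print(move, move >= TARGET_PIPS)
```

Passing the quotes as strings keeps the arithmetic exact, which matters when a target is checked with `>=`.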

Trading within a limited time puts pressure on everybody, and optimism often tempts us to abandon the strategy. Don’t let it. Whatever the situation, don’t go beyond your plan, even if you still have time.

#2 Use the early time of Binary Trading

The best times for binary trading are given above, but don’t be active only during those hours. Sit down at your desk at least one hour earlier. Why? To plan your moves. There is no tool more precious than your brain.

Binary trading depends heavily on market movements, and traders are spread across different countries, so it can be hard to know the current situation. Make sure you are following market experts’ calls and have all your tools ready to go.

Many traders will compete with you during the best hours, but not everyone will be as dedicated as you. Follow this advice and become a champion of the ‘overlapping hours’. Use those hours to take the steps your strategy calls for.

#3 Don’t push your luck

This tip is not about the best trading hours; it is about you. You are the only person who decides whether you win or lose. Pushing your luck is the biggest mistake in trading, and avoiding it is what separates trading from gambling.

Never let greed push you to go for more. In trading there is an unwritten rule: less is more. Be satisfied with 15 pips on any binary options trade. If you have done your research and feel confident, go for 20 pips at most.

Rather than over-trading a specific stock, cryptocurrency, or Bitcoin, you can trade multiple stocks. You can even try different regions among the three major stock markets. The options are there, but choosing among them carefully is important.

The stock markets and their timings

Most trades are made through the stock exchanges. These five markets are considered the major stock markets:

- The New York Stock Market

- The Tokyo Stock Market

- The London Stock Market

- The Hong Kong Stock Market

- The Sydney Stock Market

Market timing matters for your trades because these markets open and close at different times. Which markets are active depends on your location and time zone. All these markets remain open for approximately 9 hours. In Greenwich Mean Time (GMT), the Sydney market opens when the New York market closes, and the London session partly overlaps both the Tokyo and New York sessions.

Here are the stock market hours:

- Sydney – 9 pm to 6 am GMT

- Tokyo – 11 pm to 8 am GMT

- London – 7 am to 4 pm GMT

- New York – 12 pm to 9 pm GMT

- Hong Kong – 1:30 am to 8 am GMT

This lets you trade binary options 24 hours a day and gives you numerous opportunities to seize.
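The 24-hour coverage can be checked directly from the hours listed above. The Python sketch below assumes exactly those GMT hours and ignores weekends and holidays; `MARKETS_GMT`, `is_open`, and `open_markets` are illustrative names.

```python
# Opening hours from the list above, as (open, close) in minutes after midnight GMT.
MARKETS_GMT = {
    "Sydney": (21 * 60, 6 * 60),
    "Tokyo": (23 * 60, 8 * 60),
    "London": (7 * 60, 16 * 60),
    "New York": (12 * 60, 21 * 60),
    "Hong Kong": (1 * 60 + 30, 8 * 60),
}

def is_open(market, minute):
    """True if the market is open at the given minute of the GMT day."""
    start, end = MARKETS_GMT[market]
    if start <= end:
        return start <= minute < end
    return minute >= start or minute < end  # session wraps past midnight

def open_markets(hour, minute=0):
    """List the markets open at hour:minute GMT."""
    now = hour * 60 + minute
    return [m for m in MARKETS_GMT if is_open(m, now)]

# Check that at least one market is open at every minute of the day.
always_covered = all(open_markets(0, m) for m in range(24 * 60))
print(open_markets(7, 30))
print(always_covered)
```

Calling `open_markets` with your current GMT hour shows which sessions overlap at that moment.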

What time does the Binary market close?

Binary options are a versatile market with numerous assets to trade, but not all of these assets are available all the time. This is because their home markets are in different time zones.

In the binary market, forex and stocks are heavily traded, especially when the major stock market sessions overlap.

US stocks are widely popular and usually trade between 1:30 pm and 8 pm GMT (9:30 am to 4 pm New York time during daylight saving). Activity drops between 4 pm and 5 pm GMT (12 pm and 1 pm in New York).

So what are the best days to trade binary options? It depends on the asset. On weekdays, European stock indices like the Xetra DAX and the FTSE are traded from 11 am to 7:30 pm GMT. These are the standard times when the binary market opens and closes. Weekdays are considered the best days. However, if you are interested in crypto binary trading, the day of the week is not relevant: cryptocurrencies are available all the time.

Trading times also depend heavily on the binary options platform you use. You can always contact customer support to find out the trading times for the assets you want.

Strategies you can use during weekends

Because activity is low on weekends, the market is less responsive. Planning and strategizing your weekend binary trading is therefore the best way to aim for a profit.

The following are some of the most basic and widely used strategies:

#1 Gap trading

Gap trading means trading price jumps, and it is widely used in the forex market. This strategy suits the weekend because you can trade the gaps in currencies. A price jump occurs when some force moves the market and pushes the price from one level to another, skipping the price levels in between.

What are the reasons for gaps?

Price gaps occur for many reasons. For example, when volume is high, a gap can form just as a new movement is about to start.

Closing gaps are seen mostly on weekends, because many traders take these days off and new movements are highly unlikely. It does not take a large number of traders to form a closing gap. When a few traders invest in the same direction, other traders believe it to be a mistake and start investing in the opposite direction.

After an upward gap, they sell their assets, causing the market to fall until the gap closes. After a downward gap, investors start buying, causing the market to rise again until the gap closes. If the market has low volume on the weekend, be prepared: the chances of a gap closing are high.

How to apply the strategy?

If you believe the gap is definitely going to close, you can place your trades easily, because you know two things: first, the price target, and second, the expiration time. With this data alone, you can trade high/low options on currencies and even commodities.
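As a rough illustration of the price target, the Python sketch below classifies a gap and derives the level at which it would close. It assumes the gap is measured between the previous close and the next open, and that a closing gap returns to the previous close; the function name, the `min_gap` threshold, and the example prices are hypothetical.

```python
def describe_gap(prev_close, next_open, min_gap=0.0):
    """Classify a gap and return the price at which it would close.

    Returns None when the open is within min_gap of the previous close.
    """
    gap = next_open - prev_close
    if abs(gap) <= min_gap:
        return None
    direction = "up" if gap > 0 else "down"
    # A gap closes when price returns to the previous close, so an upward
    # gap implies a "low" forecast and a downward gap a "high" forecast.
    forecast = "low" if direction == "up" else "high"
    return {"direction": direction, "target": prev_close, "forecast": forecast}

# Hypothetical EUR/USD prices.
print(describe_gap(prev_close=1.0850, next_open=1.0880))
```

The sketch only identifies the target; whether the gap actually closes before the option expires is the trader's own judgment.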

#2 The Bollinger Bands

Developed by John Bollinger, these are statistical chart bands that depict the price and volatility of an asset over a period of time. They give you a price channel that the market is unlikely to leave.

Bollinger Bands are said to be highly predictive, especially on weekends. There are three lines:

- Upper line: operates as a resistance level.

- Middle line: could be a support or resistance level.

- Lower line: works as a support level.

Bands for weekends

Bollinger Bands are extremely helpful during weekends, and using them properly will give you the most benefit. The reason is that on weekdays, with more traders and events in the financial industry, movements increase and the bands vary more.

But when the market has low volume, it becomes stable, and any large move is less likely. This makes the bands more precise and useful.

How to apply the strategy?

First, open your trading platform and choose the desired asset. Second, open the price chart and add the Bollinger Bands. Third, wait for the market to reach one of the band lines. Finally, make your forecast about the market turning around. You can use a basic trade type like high/low to predict that the market will not break through the Bollinger Band.
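The steps above can be sketched in Python. This is only an illustration, assuming the commonly used settings of a 20-period simple moving average for the middle line and bands two standard deviations away; your platform's indicator may use different defaults. The price series is made up, and `bollinger` and `band_signal` are hypothetical helper names.

```python
from statistics import mean, pstdev

def bollinger(closes, period=20, width=2.0):
    """Return (lower, middle, upper) computed from the last `period` closes."""
    window = closes[-period:]
    if len(window) < period:
        raise ValueError("not enough prices for the chosen period")
    middle = mean(window)
    spread = width * pstdev(window)
    return middle - spread, middle, middle + spread

def band_signal(price, lower, upper):
    """Forecast a turn back toward the middle when price reaches a band."""
    if price >= upper:
        return "low"   # price at resistance: forecast a move down
    if price <= lower:
        return "high"  # price at support: forecast a move up
    return None        # price inside the channel: no trade

closes = [100 + (i % 5) * 0.2 for i in range(20)]  # made-up quiet market
lower, middle, upper = bollinger(closes)
print(band_signal(101.5, lower, upper))
```

Returning `None` while the price is inside the channel mirrors the instruction to wait until the market reaches one of the lines.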

These are some of the standard approaches you can use. However, you can also formulate your own tactics. There are many different ways to find opportunities on the weekends.

Conclusion on the best hours and times for Binary trading

Many other countries also have stock exchanges open for binary trading. However, trading the markets covered here is best. Why? The reason is the value of their currencies. Apart from Bitcoin and other cryptocurrencies, these markets are the gems of trading.

The other reason, overlapping hours, has been explained too. So, if you build a solid base for yourself and follow the best times mentioned for the respective countries, you give yourself the best chance of a profit.

Conclusion on the tips:

- Start trading during main market hours (applies to every asset)

- Forex also has main market hours

- Use the stock exchange opening time in Europe and the USA for the best volume

- Do not trade when the stock exchange is closed

- Can you trade binary options 24/7? Yes, cryptocurrencies are available 24/7
