Focus Option review – Scam or not? – Test of the broker

- Crypto options

- Multiple payment methods

- High profit up to 88%+

- User-friendly interface

- Personal support

- Fast registration

The cryptocurrency market is growing rapidly and has emerged as a convenient way to trade digital assets. As binary crypto trading has become more popular, the number of platforms offering it has also increased, so choosing the right one is not easy.

But don’t worry: we have analyzed the market thoroughly and found what we consider the best trading platform. It is called Focus Option. It is easy to use and has quickly become a favorite among traders.

Keep reading to learn more about this platform.

(Risk warning: Your capital can be at risk)

Quick facts about Focus Option:

| ⭐ Rating: | (5 / 5) |

| ⚖️ Regulation: | ✖ (not regulated) |

| 💻 Demo account: | ✔ (available, unlimited) |

| 💰 Minimum deposit: | $10 |

| 📈 Minimum trade: | $1 |

| 📊 Assets: | 40+ Currency pairs, 20+ Cryptocurrencies, 60+ Commodities, Stocks & Indices |

| 📞 Support: | 24/5 customer support via phone, chat, email |

| 🎁 Bonus: | No bonus available |

| ⚠️ Yield: | Up to 88%+ |

| 💳 Deposit methods: | Credit cards (Mastercard, Visa Cards), Debit cards, Cryptocurrencies (Bitcoin, USDT, and more), Bank transfers, Local payment methods, e-wallets |

| 🏧 Withdrawal methods: | Credit cards (MasterCard, Visa Card), Debit cards, Cryptocurrencies (Bitcoin, USDT, and more), Bank transfers, Local payment methods, Electronic wallets |

| 💵 Affiliate program: | Available |

| 🧮 Fees: | No deposit fees. No withdrawal fees. Spreads and commissions apply. No hidden fees. |

| 🌎 Languages: | English, Portuguese, Spanish, Japanese, Malay |

| 🕌 Islamic account: | Not available |

| 📍 Headquarters: | St. Vincent and the Grenadines |

| ⌛ Account activation time: | Within 24 hours |

What is Focus Option? The broker presented:

Focus Option is a new binary options broker, founded in 2021. This might make some people doubt what the broker has to offer. The company has its headquarters in St. Vincent and the Grenadines. Since it has only recently been set up, it has not yet attracted many customers. The broker also cannot yet demonstrate its legitimacy, as it is not under any international regulation. The lack of a licensing authority gives traders reason to be skeptical about trading on the platform.

Traders do, however, have access to multiple trading assets, though the number of assets does not match that of older, more popular forex brokers. The assets are easy to access thanks to the simple design of the trading platform. The platform is user-friendly, making it easy for users to understand.

Although Focus Option is a new broker, the platforms available are quite impressive, and customer support is offered through several channels, which we look at later in this article. Without further delay, let’s look at the features of this platform. Focus Option is primarily a binary options and CFD-based trading platform.

Pros:

- The best thing about Focus Option is that it offers more than 80 cryptocurrencies

- Traders get to choose from multiple expiry times

- For correctly predicting the market, one can expect payouts of up to 95%

- The trading account can be funded via Bitcoin and other cryptocurrencies

- Withdrawal gets processed within 24 hours

- The minimum deposit is $10

Cons:

- The only disadvantage is that the binary options demo account is valid for 30 days.

Is Focus Option regulated? Security of the broker

Regulation is an important evaluation criterion for any binary options broker. Unfortunately, Focus Option is not regulated, which is a disadvantage. The broker only started in 2021 and does not yet have a financial authority supervising it. There is no guarantee that traders’ funds are kept in an account separate from the broker’s own funds.

However, we tested the broker ourselves. On Focus Option, all our profits were paid out promptly and reached the withdrawal account after a short time. Other traders have also reported positive experiences with Focus Option. The trading platform is encrypted and does its best to prevent hacking and other types of scams.

Of course, the strongest assurance of a trader’s safety is a license from an established authority such as CySEC, BaFin, the FCA, or ASIC. Maybe in the future, the broker will be under proper regulation.

See all facts about the security:

| Regulation: | No |

| SSL: | Yes |

| Data protection: | Yes |

| 2-factor authentication: | Yes |

| Regulated payment methods: | Yes, available |

| Negative balance protection: | Yes |

Should you choose Focus Option? Overview of the trading offers and assets

Yes, Focus Option is worth choosing, as this new broker lets you trade crypto binary options and CFDs. You can choose from over 80 crypto option pairs, making it one of the best brokers in this space. In addition, traders get competitive payouts and a user-friendly platform they can use without hassle.

One standout feature of Focus Option is its demo account, which comes with $10,000 in virtual money. The demo account offers an opportunity to trade over 80 cryptocurrencies, including Litecoin, Ethereum, Bitcoin, and more.

In addition, the demo account offers over 140 binary trading tools, so you get an experience close to real trading. On the live account, you can withdraw money quickly without paying any commission on withdrawals or deposits. If you are looking for high payouts, this platform offers up to 95%.

What’s also interesting is that the broker combines satisfactory customer service with its cryptocurrency offering. The support team of this trading platform is always ready to help you so you do not run into difficulties.

Quick facts about the offers of Focus Option:

| Minimum trade amount: | $1 |

| Trade types: | Binary Options, digital options |

| Expiration time: | 60 seconds up to 4 hours |

| Markets: | 120+ |

| Forex: | Yes |

| Commodities: | Yes |

| Indices: | Yes |

| Cryptocurrencies: | Yes |

| Stocks: | Yes |

| Maximum return per trade: | Up to 88%+ |

| Execution time: | 1 ms (no delays) |

What makes Focus Option better?

As there are many other trading platforms, it’s common to ask what makes Focus Option a better choice. This trading broker is different from others as it is designed to help clients earn better payouts.

Focus Option is a client-oriented broker that puts its clients first. Its secured trading system does not let any third party steal traders’ information. Also, the traders’ funds are kept safe from hackers.

Here’s what makes Focus Option a better option:

1. Innovation

Focus Option’s highly skilled team works constantly to add new features to the platform. This continuous innovation makes the platform more diverse and easily accessible from any part of the world.

Traders who have registered with Focus Option get to experience a better trading environment. This way, their portfolio improves, and their trading skills are sharpened.

2. Integrity

Unlike many other trading platforms, Focus Option aims to ensure that its clients’ funds and interests are safeguarded. The platform does not want traders to suffer any kind of loss.

Focus Option is committed to practices and policies that are designed to benefit traders. That means when you register with this platform, you get to enjoy the best trading service.

3. Technology

The technology that Focus Option uses is responsive, unique, and user-friendly. The platform’s experts only include technology they consider best in class, so that traders can use Focus Option for both short-term and long-term trades.

4. Multiple expiry times

One thing that makes Focus Option a better choice is the multiple expiry times. When you trade through this platform, you get an option to choose from different expiry times, including 30 seconds, 1 minute, 2 minutes, 5 minutes, 15 minutes, 30 minutes, 1 hour, end of the day, and long-term.

5. Sentiment indicator

Focus Option provides a sentiment indicator, which gives you an idea of the ratio of high to low trades on a currency. With its help, you can gain insight into the market’s mood.
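As a rough illustration of what a sentiment indicator reports, the sketch below turns counts of open high and low positions into percentages. The function name `sentiment` and the sample counts are hypothetical; this review does not describe how Focus Option computes its indicator.

```python
def sentiment(high_trades: int, low_trades: int) -> tuple[float, float]:
    """Return the share of high and low positions as percentages.

    high_trades -- number of open trades predicting a higher price (hypothetical input)
    low_trades  -- number of open trades predicting a lower price (hypothetical input)
    """
    total = high_trades + low_trades
    if total == 0:
        raise ValueError("no open trades to measure")
    high_pct = 100 * high_trades / total
    # The low share is whatever remains after the high share.
    return high_pct, 100 - high_pct


high, low = sentiment(65, 35)
print(f"High: {high:.0f}%  Low: {low:.0f}%")
```

A reading far from 50/50 only shows what other traders expect; it does not say whether they are right.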

6. Account levels

When registering with Focus Option, you can choose from different account types. The account type gives you the power to control your trading style and gain more market benefits.

7. One-click trading

Another unique aspect of Focus Option is that it allows one-click trading. That means you can execute the trade in just a single click while trading through this platform.

8. Exceptional customer support

You might encounter a tricky trading situation or come across something that is difficult to understand. In such a situation, you can turn to Focus Option’s customer service.

The support team of this trading platform is determined to help you in any situation so you can get a better trading experience. Customer support of Focus Option is able to help traders of all experience levels and help them meet their trading goals.

9. Superior trading environment

One thing that makes Focus Option a true trading platform is its superior trading environment. The clients of this platform can enjoy professional trading conditions like low-latency connectivity and premium pricing.

10. Advanced trading platform

Lastly, Focus Option lets you use technical analysis, chart layouts, and indicators. The platform offers the tools you need to analyze the market before placing trades.

Security measures for traders and their money

As already seen above, Focus Option is not yet under any form of regulation, so there is no guarantee that it is a safe broker for trading. There are a lot of forex brokers out there that are not legitimate. They are scams looking to steal from investors trading on the platform. However, this may not be the case for Focus Option because, since it was founded, there has not been any news of fraudulent activity involving the broker.

Since the broker has no regulation, it is unknown whether traders’ funds are kept in the same account as the broker’s funds or in a separate one. You can, however, check the broker’s legal terms on the official website for risks and other necessary information about its legal standards.

Review of the different trading platforms of Focus Option:

Focus Option web trading

Once you have registered with Focus Option, you can browse and operate your trading platform from anywhere. The web version of this trading platform can be accessed quickly and without any trouble.

What’s better? You do not have to download any additional binary software to use the web version. All you need is a stable internet connection, and you are ready to trade. The web version of this young trading platform is available in different languages, including Japanese, Portuguese, English, Malay, and Spanish.

Focus Option mobile trading (app)

Focus Option wants its traders to be able to tap into the market easily and stay updated with important trading information. For this reason, it allows trading on mobile devices. Traders can easily navigate the mobile version of the trading platform.

Like the web version of this platform, the mobile version is simple and free to use. You can quickly understand all the functions, and the binary trading app does not feel clunky. In addition, the mobile app lets you check your transaction history quickly.

The mobile app can be downloaded quickly and used on smartphones, including iOS devices. Also, your funds are secured. That means you can trade without any worry.

You can easily keep tabs on the news and daily market analysis when you enter the market through the mobile or web platform. Keeping yourself updated on news from large financial institutions and banks helps you make better trading decisions.

The mobile and web versions let you open a trade quickly, so you do not miss trading opportunities.

How to trade on the Focus Option platform:

Trading on the platform is simple. As with any other broker, the trader needs a trading account with the broker in order to access the trading platform. As seen above, you can access the broker using your mobile device or desktop.

Once you have access to your trading platform, you can select any instrument and open a trade on it. Select the asset, the amount you are willing to stake, and the time at which you want your position to expire. Remember that before trading on the platform, you need to have the required money in your trading account. When you place your trade, keep a careful eye on it. Binary options are a tricky market; an individual can lose everything they have in a matter of minutes. To avoid this, make sure that you watch the market of whatever asset you are trading.

If your prediction is right at the end of your staking period, you will receive a profit. But if your prediction is wrong, you will lose the money you used to place the trade. The trading platforms of Focus Option are user-friendly, so traders can trade properly. The platform helps traders stay focused while trading because there are no side attractions to distract them.
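The win/loss rule described above can be written as a small calculation. The sketch below is illustrative only: the function names and the 88% payout rate are assumptions based on the figures quoted in this review, not Focus Option’s actual settlement logic.

```python
def settle_trade(stake: float, payout_rate: float, won: bool) -> float:
    """Return the net result of one binary option trade.

    stake        -- amount risked on the trade, in dollars
    payout_rate  -- payout as a fraction of the stake (0.88 means 88%, an assumed rate)
    won          -- True if the prediction was correct at expiry
    """
    if stake <= 0:
        raise ValueError("stake must be positive")
    # A correct prediction earns the payout; a wrong one loses the whole stake.
    return round(stake * payout_rate, 2) if won else -stake


def break_even_win_rate(payout_rate: float) -> float:
    """Share of trades that must win for the expected result to be zero.

    Solves p * payout_rate - (1 - p) = 0 for p.
    """
    return 1 / (1 + payout_rate)


print(settle_trade(10, 0.88, won=True))    # net profit on a winning $10 trade
print(settle_trade(10, 0.88, won=False))   # net loss on a losing $10 trade
print(f"{break_even_win_rate(0.88):.1%}")  # win rate needed to break even
```

With an 88% payout, a trader has to win roughly 53% of trades just to break even, because each loss costs more than each win earns.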

Traders also have access to a practice account on the platform. This account offers the same assets as the live account. Traders can use them to learn how to trade before moving to their live account. If you still find it hard to trade on the available platforms, you should contact customer support for assistance.

How to trade Forex on Focus Option

One of the most important things to do before trading forex is to check information and updates on the currency pair you want to invest in. The broker allows traders to select from more than 40 forex currency pairs. When you select one, find out how its market is doing from different sources before trading on it. Once you have done so, you can go ahead and place your trade.

If you want to place a trade on forex pairs on Focus Option, you need to have funds in your trading account. You will use these funds to set the amount you wish to stake. Set the time you want the trade to last. Focus Option allows traders to hold long trading positions on the chart. Once you have set your position on the chart, the amount to stake, and the duration, you are good to go. Hit the confirm button to approve your decision.

Once you confirm, your trade will last for as long as you have set it. For example, if you set 3 hours, it will take 3 hours before your trade ends. Within this time, you can decide to exit the market if the trade is not going in your favor, or you can stay in and receive your reward if your prediction is right. Forex is that easy to trade on the platform. The practice account has forex available, so you can use it to train yourself.

How to trade binary options on Focus Option

Focus Option is well-known as an options-based company, which means that binary options are available on the platform. To trade a binary option on any asset, select the amount you want to stake along with your trading position. As a trader, you will need to watch the trade to make sure that it goes smoothly until the time elapses.

Depending on the market, you will need to decide whether to stay in or end the trade. Usually, when it is going well, you would stay and see your prediction through to the end, but if not, you may have to abandon it before the time elapses. Leaving the trade before the time elapses can sometimes prevent a total loss of the amount you staked.

Traders are also allowed to trade binary options on the practice account. They can use the demo account to learn how binary options work and how to trade them on the Focus Option trading platform. As with the live account, traders can access the trading assets available on the platform. However, the demo account is only a practice account, so a profit or a loss there doesn’t matter.

How to trade cryptocurrencies on Focus Option

Crypto is one of the most important assets on Focus Option. It can be traded on the broker’s platform on both phones and desktops. Traders have access to quite a few cryptocurrencies on the platform. The approach to trading crypto is similar to trading forex or binary options on the platform.

Decide on the coin or token you want to trade and research it extensively. The research will help you decide whether it is a good fit for you to trade. After your research, you can return to the platform and follow the normal process of placing a trade: select a position on the chart, enter the amount you are willing to stake, and, lastly, set the duration of the trade.

Once you have confirmed the trade, check how the market is going from time to time. Whether you win or lose depends on whether your prediction is right or wrong. Traders who want to practice trading cryptocurrencies can do so with the demo account before moving to their live account.

How to trade stocks on Focus Option

Stocks can only be traded on the mobile application, but the process of investing in this asset is the same as for other assets on the platform. Choose your preferred stock instrument and place a trade on it. The stock market is subject to change, so be sure to check the market. Your prediction is what determines your win or loss. However, you can help yourself make better predictions by researching the stock you are trading extensively.

The platform has a simple interface, so traders can fully concentrate whenever they place a trade on it. If you are only holding a position for a short time, there is no need to take your eyes off it. This way, you know when to stop or continue holding the trading position.

Review of Focus Option’s trading offers and conditions

The number of assets available on the platform is limited. However, traders still have a good selection to choose from, including popular assets they can use to place trades. The assets available on the broker include forex pairs, cryptocurrencies, and commodities. Let’s take a closer look at the different assets available on Focus Option.

Forex

Traders can choose from the different currency pairs available on the platform. There are more than 40 forex assets for traders to place trades with. Some of the currency pairs are AUD/CAD, EUR/USD, and GBP/CAD. The currency pairs come with different spreads and leverage. Forex is a good addition to your portfolio because the market can be monitored more easily than other assets.

Cryptocurrency

Focus Option is best known for letting traders trade cryptocurrencies. The broker offers a variety of cryptocurrencies to pick from. There are more than 20 cryptocurrencies available on Focus Option for traders to place trades with. Some of the coins are Bitcoin, Ethereum, Ripple, and Bitcoin Cash. They also come with different spreads and leverage.

Commodities

Unlike at most brokers, the number of commodity assets available on the platform is very low. This leaves the broker unable to compete with leading platforms that offer more than 60 commodities. Focus Option only has two metals available on the platform: gold and silver. However, both metals have high liquidity, which makes them good assets to trade. Both also come with different spreads and leverage.

Stocks

Traders can trade stocks on the platform. However, this asset is only available to those who use the mobile application. The stock market is a popular market to open trades in, especially for new traders, because many of the companies behind the stocks are familiar, which makes the asset easier to follow.

Indices

This is another asset that is only accessible through the mobile application. The indices available are not as numerous as those of leading forex companies, but they are sufficient. The broker offers some of the best-known indices for traders to access. Before choosing any of them, make sure you have researched them properly.

Trading fees: How much does it cost to trade on Focus Option?

The trading fees on Focus Option are lower than those of most existing platforms. The spread, however, varies from asset to asset. Traders are not charged for deposits or withdrawals on the platform. This makes the broker welcoming, especially to new traders.

The spreads range from 1 to 3 pips, depending on the account type and the asset you trade. Not much is known about the platform’s internal trading fees, since the broker does not state them on its website. This makes it difficult for traders to know the exact cost of trading.
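To get a feel for what a spread of 1 to 3 pips means in money, the sketch below converts a spread into a cost in the quote currency. The function name `spread_cost` and the position size of 10,000 units are assumptions for illustration; the pip size of 0.0001 is the usual convention for most currency pairs (pairs quoted in Japanese yen normally use 0.01). It says nothing about the broker’s undisclosed internal fees.

```python
def spread_cost(spread_pips: float, units: float, pip_size: float = 0.0001) -> float:
    """Cost of crossing the spread, expressed in the quote currency.

    spread_pips -- spread in pips (the review quotes 1 to 3)
    units       -- position size in units of the base currency (hypothetical)
    pip_size    -- price value of one pip; 0.0001 for most pairs
    """
    return spread_pips * pip_size * units


# Compare the low and high end of the quoted spread range on a 10,000-unit position.
for pips in (1, 3):
    print(f"{pips} pip spread costs {spread_cost(pips, units=10_000):.2f}")
```

On this assumed position size, moving from a 1 pip to a 3 pip spread triples the cost of opening the same trade.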

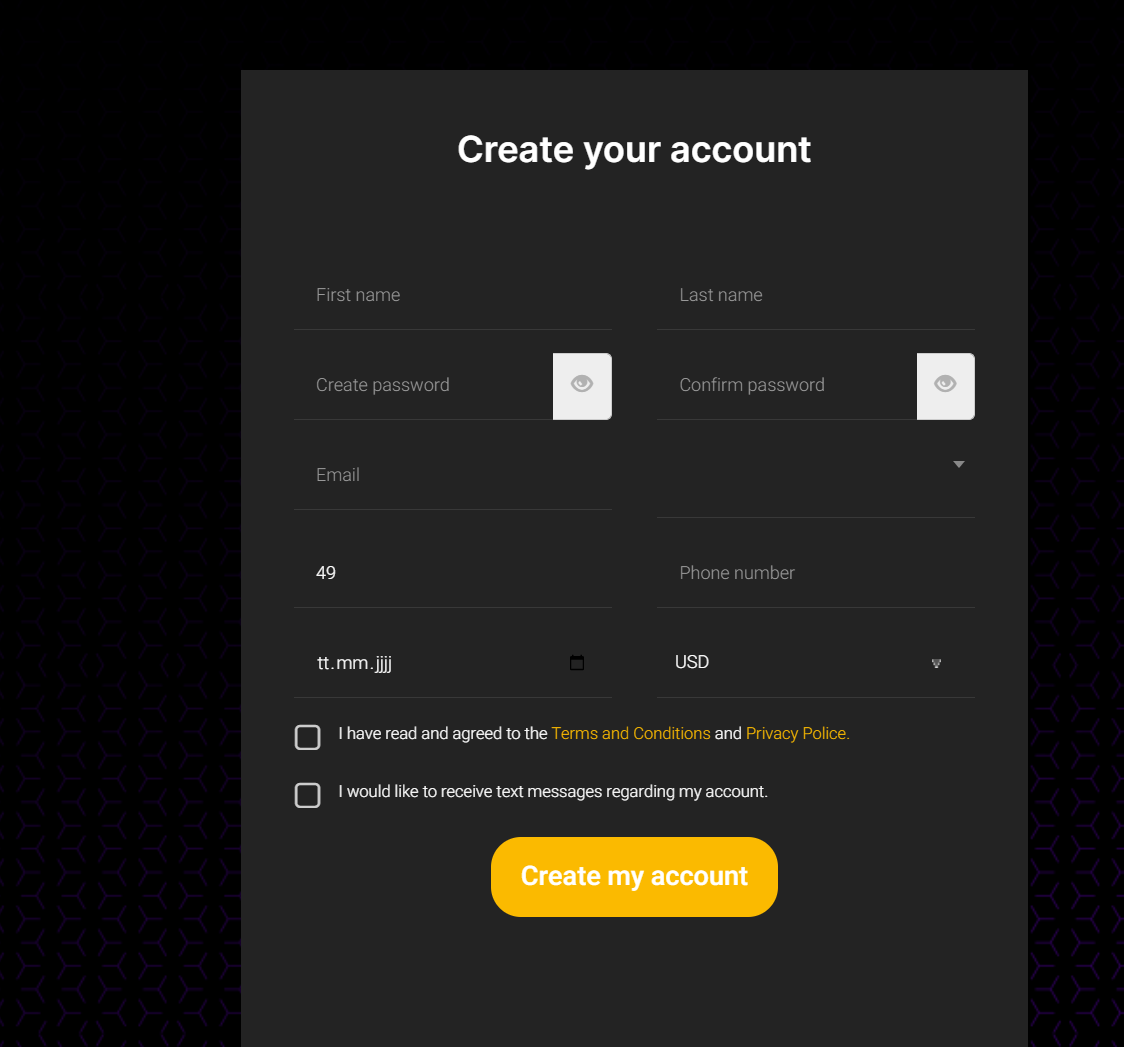

How to register yourself with Focus Option

If you want to use the trading tools of Focus Option, you must register with the platform. But how do you do that? You can do it by following three simple steps.

Step 1: Visit the broker’s website and click on the signup button. After that, enter the required details and choose the account type you want to start trading with. Make sure you fill in the correct information so your account can be verified quickly. If Focus Option can’t verify your information, it might cancel your account.

Step 2: After you have selected an account type, fund the account with at least the minimum deposit amount. Once the amount has been transferred successfully, you can use your account. You can only fund your account with the payment methods that Focus Option accepts.

Step 3: Once your account is set up, you can start trading. You simply have to predict whether the underlying asset’s price will rise above or fall below the current market price. For correctly predicting the market, you will be rewarded with high payouts.

Different account types on Focus Option

Each trader should have the flexibility to use different trading accounts as their goals differ. Some traders simply want to learn the basics of binary options trading, some want to improve their skills, and some want to build a career.

Fortunately, Focus Option understands this. That’s why it offers different trading accounts. These accounts have different features, and their minimum deposit amounts also differ.

Below are four account types that Focus Option offers for its traders.

1. Bronze account

This trading account type is designed for beginners new to the trading market. The minimum deposit amount for this account is $10. After you have successfully deposited the money through the accepted payment method, you can easily start trading.

Before you start trading, it is advisable to practice in the demo account. This way, you get an idea of real trading, and your chances of losing money are lower. With this account type, you can expect smooth 24/5 customer support and the option to trade using 140 instruments. It includes technical analysis, indicators, and a multi-chart layout.

2. Silver account

A silver account is recommended for traders who have some trading experience. The minimum deposit amount for this trading account type is more than the earlier one. However, you also get to enjoy more features and benefits.

Along with 24/5 customer support and technical instruments, you can also experience accelerated fiat instruments. Furthermore, you can enjoy higher investment limits and unlock better payouts per trade.

3. Gold account

Next is the Gold account, which savvy traders use to take their trading skills to the next level. What’s interesting about this account type is that it offers all the benefits of the previous two account types and more.

The first of the two additional features is access to exclusive trading signals and market analysis.

The second is a personal account manager. You can have your questions answered by the account manager and enjoy a smooth trading journey. The minimum deposit amount for this account is higher than that of the Silver account.

4. Platinum account

The last one is the Platinum account, which is mostly used by expert traders who want to build a strong trading career. By paying the minimum deposit amount, you can enjoy two additional features: the highest investment limits and exclusive VIP events.

The platinum account lets you earn up to 95% payouts for correctly predicting the asset’s price. You can also enjoy exclusive VIP client events to sharpen your trading skills.

Can you use a demo account on Focus Option?

Yes, traders can use a demo account on Focus Option’s trading platform. The broker provides a demo account that is already funded with $10,000. However, the money in the account is not real and cannot be withdrawn.

The money in the demo account is meant to help you start trading on the platform. The demo account is a practice account that helps you, as a new trader, get familiar with the trading environment of Focus Option. The demo account does not last forever and expires 30 days after account creation.

How to log in to your Focus Option trading account

When you want to log in to your trading account, select the trade button on the right side of the page. You can also log in from the mobile app. To log in, enter the email address and the password you used to create the account.

If the account details are correct, you will immediately have access to your trading account and can start trading. If the account details are wrong, you can recover your account and then log in again. The login process of Focus Option is straightforward.

Verification – What do you need, and how long does it take?

The verification process is the same as with other brokers. The broker requires traders to submit government-issued proof of identity and proof of residency. For proof of identity, traders must submit their national identity card, passport, or driver’s license to verify their name, age, nationality, etc.

Proof of residency simply shows that you live in the country you claim to live in. For this document, traders can submit a utility bill. The verification process should take up to 24 hours. Make sure the documents are authentic; if not, your account will not be opened.

Deposit and withdrawals on Focus Option

You can quickly deposit money into and withdraw money from your Focus Option trading account. This trading platform accepts a variety of payment methods, so you can deposit money from different parts of the world.

Depending on the payment type, the amount is either processed instantly or takes 1 to 3 days. Also, when you request a withdrawal, you will receive the money through the same method you used for depositing.

Deposit methods:

The minimum deposit on Focus Option is $10. You do not pay any fees.

- Credit cards (MasterCard, Visa Card)

- Debit cards

- Cryptocurrencies (Bitcoin, USDT, and more)

- Bank transfers

- Local payment methods

- Electronic wallets

Note that the available payment methods depend on your country of residence.

Withdrawal methods:

The minimum withdrawal on Focus Option is $10. For bank transfers, you have to withdraw at least $100. There are no fees.

- Credit cards (MasterCard, Visa Card)

- Debit cards

- Cryptocurrencies (Bitcoin, USDT, and more)

- Bank transfers

- Local payment methods

- Electronic wallets
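The withdrawal minimums stated above ($10 in general, $100 for bank transfers) can be expressed as a simple check. This is a hypothetical sketch: the method keys and the function name `can_withdraw` are made up for illustration and do not reflect the broker’s actual system.

```python
# Minimums taken from this review; method names are illustrative labels only.
DEFAULT_MIN_WITHDRAWAL = 10.0
MIN_WITHDRAWAL_BY_METHOD = {"bank_transfer": 100.0}


def can_withdraw(amount: float, method: str, balance: float) -> bool:
    """Check a withdrawal request against the minimum for its method and the balance."""
    minimum = MIN_WITHDRAWAL_BY_METHOD.get(method, DEFAULT_MIN_WITHDRAWAL)
    # The request must meet the minimum and cannot exceed the available balance.
    return minimum <= amount <= balance


print(can_withdraw(50, "crypto", balance=500))         # above the $10 minimum
print(can_withdraw(50, "bank_transfer", balance=500))  # below the $100 bank minimum
```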

How to deposit money – The minimum deposit explained

Once you have created your trading account and logged in, you can fund it to start trading. To fund your trading account, click on the deposit option. You will then see the different payment methods to pick from. Choose the one that suits you best, and then enter the amount you wish to trade with.

Remember that you cannot make deposits below $10 on the broker’s platform. Once you have funded your account, you can trade as you wish. Funding your trading account on Focus Option is free.

Deposit bonuses

There are no deposit bonuses on Focus Option. The broker does not advertise any bonus for first or subsequent deposits that traders may make.

Withdrawal review – How to withdraw your money on Focus Option

To withdraw money, make sure you are logged in to your trading account. Click on the withdraw option. You will then see the different payment options available on the platform for withdrawal. Select one and enter the amount you wish to withdraw.

There is, however, a minimum amount that you can withdraw. As with deposits, Focus Option does not charge traders for withdrawals from their trading accounts. It takes up to 24 hours for your withdrawal to be processed.

Customer support for Traders

The broker runs a customer support system that is available 24/5 and offers several support channels. The first is the FAQ section. Though the FAQ section is good, some questions remain unanswered there. Still, traders can get a good amount of information from the FAQ page.

Aside from the FAQ, there is a call center, live chat, and an email address that traders can use to get answers to their questions. There is also a WhatsApp number that traders can contact.

Contact Information

- Phone number – (+(62)21 2002-2012)

- Email address – [email protected]

- Website – www.focusoption.com/contact-us/

| Supported languages: | More than 5 offered |

| Live-Chat | 24/5 |

| Email: | [email protected] |

| Phone support: | +(62)21 2002-2012 |

Educational material – How to learn trading with Focus Option

There are no educational materials on this broker. The broker does not organize any courses, webinars, or seminars. This could be because it is a new broker that is still getting started.

Additional Fees

The broker does not state whether there are additional fees. For this reason, one cannot know whether swap fees or other charges apply.

Available countries and forbidden countries

The broker is available in the following countries:

- UK

- Japan

- Kenya

- Nigeria

- Australia

The forbidden countries are currently unknown, but there are most likely regions from which traders cannot join this trading platform.

Comparison of Focus Option with other binary brokers

If you are looking for an excellent binary broker, Focus Option is a must. We have given the platform a full 5 out of 5 stars because of its unique user interface and the many features it has to offer. It has an innovative trading concept that has been well-received by traders. A special function of Focus Option is that it allows one-click trading. This means that when you trade on this platform, you can execute the trade with a single click.

Unfortunately, there is no bonus available on the platform. The high yield of up to 88% makes up for this drawback. If you are looking for a reliable and good binary broker with many features, you will love Focus Option.

| | 1. Focus Option | 2. Olymp Trade | 3. IQ Option |
|---|---|---|---|
| Rating: | 5/5 | 5/5 | 5/5 |
| Regulation: | / | International Financial Commission | / |
| Digital Options: | Yes | Yes | Yes |
| Return: | Up to 88%+ | Up to 90%+ | Up to 100%+ |
| Assets: | 120+ | 100+ | 300+ |
| Support: | 24/5 | 24/7 | 24/7 |
| Advantages: | Offers One-click trading | 100% bonus available | Offers CFD and forex trading as well |
| Disadvantages: | No bonus available | Not the highest return | Not available in every country |
| | ➔ Sign up with Focus Option | ➔ Visit the Olymp Trade review | ➔ Visit the IQ Option review |

Conclusion: Focus Option is a new broker, and no scam detected

Focus Option is an excellent trading platform that you can use to enter the trading market. You can choose whichever of the four account types meets your requirements.

This binary options broker is very new and only started in 2021. So far, we have not detected any scams or fraud. There are multiple online reviews and experiences from users who give this platform 5 stars and recommend it.

FAQ – most asked questions about Focus Option:

Is Focus Option safe?

The broker cannot be said to be safe since there is no regulatory organization overseeing the platform. This makes it incredibly risky for those who want to trade on it. The only good thing so far is that there have been no reports of Focus Option misusing traders’ funds.

Is copy trading possible with this broker?

No, copy trading is not possible. Traders cannot copy another trader’s trading technique and place a trade based on the technique. Every trader must have a trading strategy to succeed on the platform.

Are there bonuses on Focus Option?

Focus Option does not advertise any bonus for the traders. This may mean that there is no bonus available on the platform.

Is Focus Option a good broker?

Based on our experience, we can say that Focus Option is a good broker. Although the platform has only been on the market since 2021, it offers attractive trading conditions with low commissions and raw spreads. Withdrawal requests are processed quickly and there are many financial assets available, including over 80 cryptocurrencies. The platform performs very well in our comparison, which is why we see Focus Option as an excellent broker.

What is the minimum deposit on Focus Option?

The minimum deposit on Focus Option is $10.

Is Focus Option regulated?

No, Focus Option is not regulated. However, this does not mean that it is a scam.

From our experience, the platform pays out the profits reliably and on time. Therefore, it can be considered safe overall. Since the platform was only established in 2021, there is a good chance we may see the platform being under good regulation in the future.