Binary Options apps enable traders to trade on the go via their mobile phones and make quick decisions for their portfolios. However, finding a Binary Options brokerage offering a professional mobile phone trading application can be challenging.

In this article, we compare the best mobile apps for binary trading in detail!

These are the top 7 Binary Options apps for Android and iOS:

- Pocket Option – The best app for any device with the highest return

- IQ Option – Best user-friendly app for beginners

- Quotex – Free signals via the app

- Deriv – Supports multiple devices and automated trading

- Olymp Trade – Good education and different account types

- Exnova – New binary trading app with high return

- Expert Option – Good option for short-term trading

The best 7 Binary Options Trading apps reviewed:

| Binary App | Availability | Return |
|---|---|---|
| Pocket Option | Android, iOS, PC, web | 98%+ |
| IQ Option | Android, iOS, PC, web | 98%+ |
| Quotex | Android, iOS, web | 95%+ |
| Deriv | Android, iOS, PC, web | 95%+ |
| Olymp Trade | Android, iOS, web | 95%+ |
| Exnova | Android, iOS, PC, web | 94%+ |
| Expert Option | Android, iOS, web | 90%+ |

(Risk warning: Your capital can be at risk)

Reviews of the best binary trading apps:

To compare the apps fairly, you would need to shortlist the top contenders, download every application, and compare their features.

Luckily, you found our article on Binaryoptions.com. We’ve saved you the trouble and reviewed the 7 best binary options trading applications available in the industry. With our selection guide and comparison, you will have found the right application to trade binaries by the end of this post.

General Risk Warning: The products listed in this post carry high levels of risk and can lead to loss of funds.


1. Pocket Option app – The best app for any device with the highest return

Pocket Option is your go-to choice if you are looking for a top binary trading app that offers the highest return and is available on any device. Let us take a closer look at the facts.

Pocket Option is known as one of the most reliable brokers for binary options traders. Although it was established only in 2017, it has built a base of over 20,000 daily users. The broker is available to traders in more than 95 countries, and its apps are listed in the App Store and Google Play worldwide. If you live in one of the supported countries, you can create a Pocket Option account without any problems.

The broker operates under the oversight of the IFMRRC, which supports its trustworthiness. The platform offers over 130 tradable assets, including those from forex, indices, stocks, commodities, and cryptocurrency markets. For these reasons, this broker is the clear winner in this comparison.


The trading experience

Pocket Option stands out from the competition by offering MetaTrader 4 and 5 applications for traders on desktops. The commercial applications are accessible as browser and desktop apps. MT4 and MT5 come loaded with several technical indicators and all the charting tools a professional may need.

Traders can use the API to program automated trades, make a signal bot, or use the copy trading features Pocket Option offers. It also makes a specialized trading keyboard available to traders.

Pocket Option also offers a simpler web-based interface that makes it easy for new binary options traders to approach trading.

However, this platform does not have features like price alerts, and two-factor authentication is only available through Google Authenticator or a similar app.

The basic trade types and a reasonable range of expiry times make Pocket Option suitable for both novice and seasoned traders. The availability of the 30-second expiry time allows traders to explore the potential of quick trading.

Traders get between 80% and 100% payouts on winning trades, and sometimes more.

The excellent social trading, tournaments, and achievement features, coupled with its compliance with the Anti-Money Laundering Policy, make it an ideal option for traders looking for a reliable, feature-rich brokerage.


Mobile app review:

Pocket Option’s Android and iOS apps are based on its in-house application as well as MetaTrader 4 and MetaTrader 5. As a result, the apps feature all the tools available on the desktop and web versions of the platform.

All five trading orders are available on the mobile apps, and the app also features the same technical and charting tools as its desktop counterparts.

While the interface is essentially a shrunk-down copy of its counterparts, the large buttons and simple user experience often make it easier to use than the other versions.

Furthermore, the mobile platform enables the applications to provide trading alerts and market updates as dedicated notifications. Pocket Option’s apps are available in several languages, making them easier to use for traders worldwide.

However, the mobile apps have some limitations. On the apps, your account is protected by only one authentication method: your ID and password. The broker has yet to provide two-factor authentication features on the applications.

This also means that traders cannot use facial or fingerprint recognition to secure their accounts.

On the plus side, the application is free and easy to use, making it a great choice in an app niche where good apps are few and far between.

Pros

- Over 100 financial instruments

- Available to all traders

- No commissions

- Custom technical indicators

- App available in several languages

- Highest returns

- Crypto deposits & withdrawals

- User-friendly

Cons

- Not all indicators are available

- A $1,000 Pocket Option deposit is required to use MetaTrader 4 and MetaTrader 5


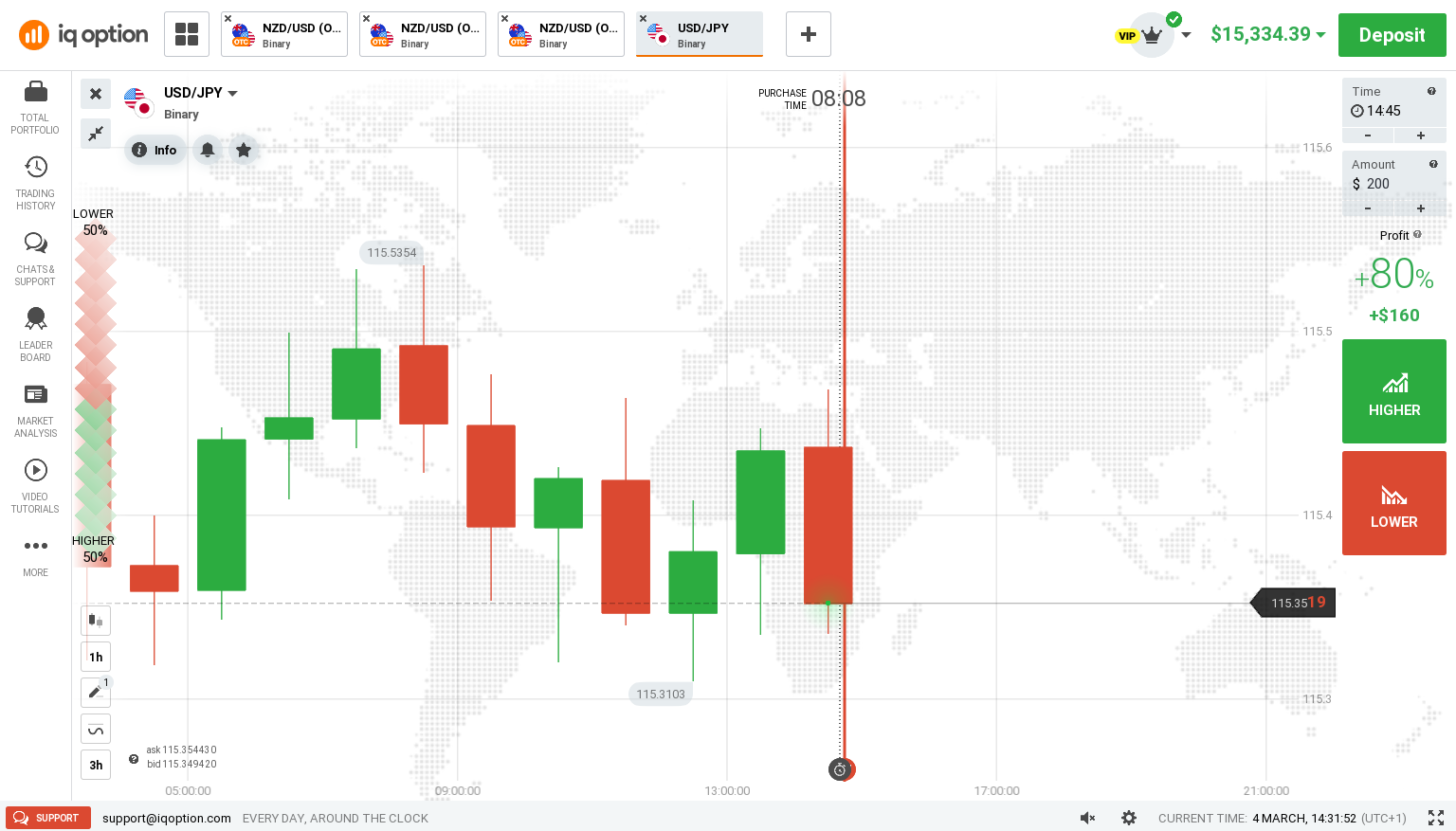

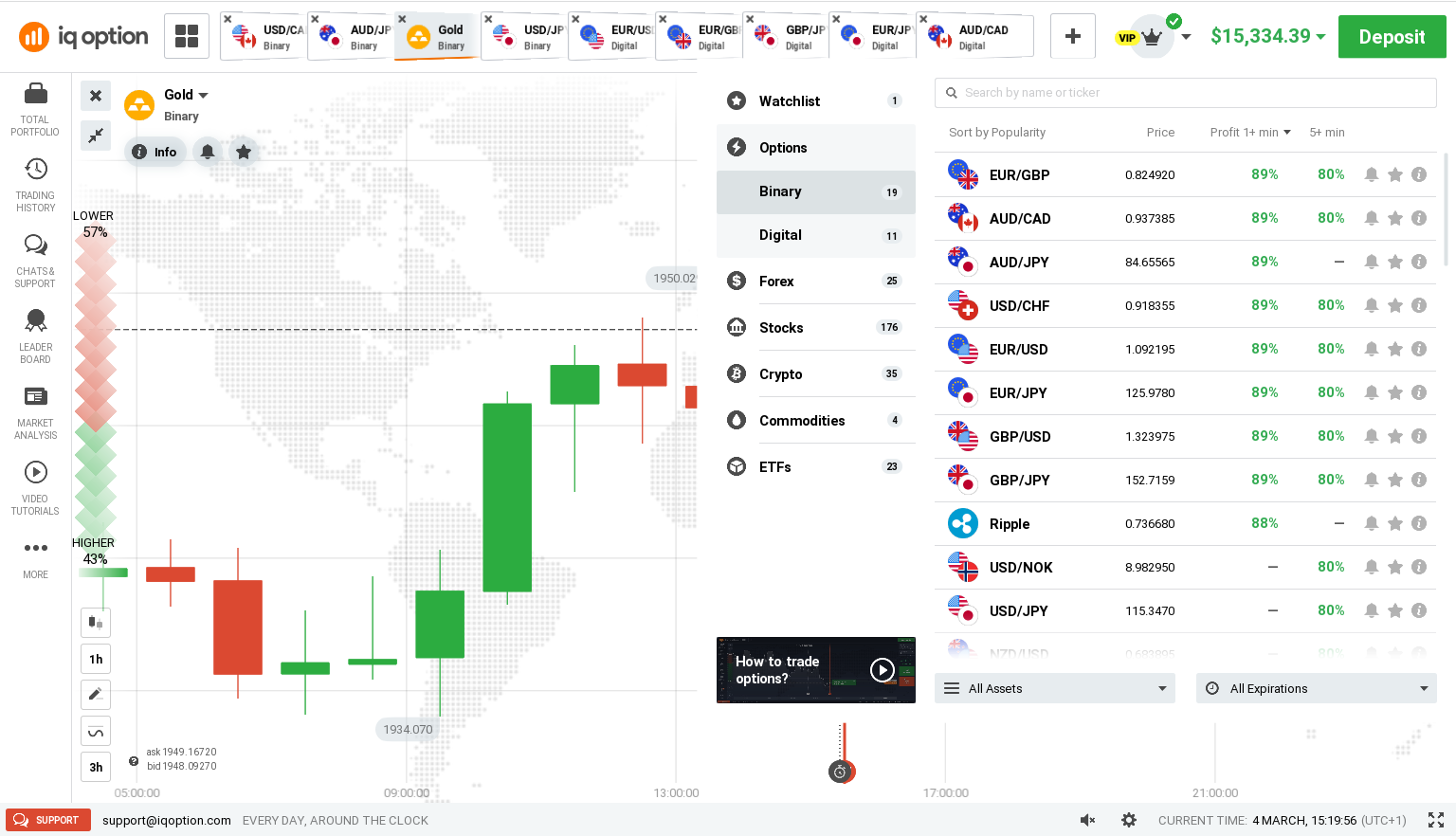

2. IQ Option app – Best user-friendly app for beginners

If you are looking for a broker with a low minimum deposit and a good trading experience, this one is the best choice for you. IQ Option offers CFD, forex, binary options, and cryptocurrency trading for traders from all around the world and is the best trading app for beginners. The broad range of markets makes it one of the most impressive binary options brokers for traders of all skill levels.

The brokerage has steadily broadened its user base since it was founded in 2013 and now stands out as one of the market’s leading binary options brokers. With over 48 million registered users, the company facilitates over 1 million transactions every day.

It is available to traders in 213 countries, including India, Malaysia, South Africa, and Singapore. However, IQ Option is not available in the US, and traders in EEA countries can only use a restricted version.


The trading experience

Unlike many other brokerages, IQ Option offers a proprietary interface that features a modern, no-nonsense design.

The platform equips traders with tools such as multi-chart layouts, historical quotes, market updates and alerts, economic calendars, and more. In addition, widgets on the platform can help traders make quick decisions. The interface is available in 13 languages.

Further, the broker also has an Investor’s Compensation Fund, which protects traders if IQ Option cannot fulfill its financial obligations. Traders on the platform are covered for up to €20,000 in such an event.

The broker offers traders up to 90% payout on winning trades.

The platform’s features make it possible for traders of all skill levels to make quick yet informed trades without much hassle.

Features such as the news feed and community chat make the platform attractive, and since several regulatory authorities oversee it, it’s the go-to option for many traders.


Mobile app review

While the browser version of the platform is impressive on its own, the seamless mobile user experience sets IQ Option apart from many other brokers. The trading area is unchanged in the mobile apps, and the platform comes with buttons that match the look and feel of the website.

The company also provides an application for Windows computers with the same seamless interface as the browser version.

The IQ Option app is available on both the Google Play Store and the App Store on iOS. Carrying over from the full website version, the mobile app provides the same neat and intuitive interface.

Further, it provides traders with all the same tools as the website, making for unhindered trading on the go.

The best thing about the mobile applications is that they boast a native design. The Android app is written to work flawlessly on Android phones, and the iOS app is tailored to run smoothly on iOS.

Natively designed applications rarely lag or crash. The IQ Option app will rarely, if ever, give you trouble when you’re trying to place a trade order.

What’s more, IQ Option swiftly updated its iOS application to meet Apple’s new requirements for binary options trading apps. The move shows the broker’s commitment to upholding the high standards it has set.

Pros

- 15 technical indicators for spotting trends

- Five charting tools

- Two account types

- Free IQ Option demo account

- CFDs also available

Cons

- Unavailable to US traders

- High leverages not available to non-professional traders

- Fixed spread accounts


3. Quotex app – Free signals via the app

Do you like to trade based on signals and real-time data? Then consider choosing Quotex as your go-to binary options app. Quotex is one of the newer brokers in the market: the company was licensed in November 2020. But do not let that hold you back. Its robust feature set attracted thousands of traders from the world over in a short period, and it has become a reputable broker in the binary options trading industry.

Quotex is owned by Seychelles-based Awesomo Ltd. and is under the regulatory oversight of the IFMRRC. It accepts traders from the US, Canada, and Hong Kong. However, payment options in these countries are limited to cryptocurrency.

The broker offers its traders Quotex deposit bonuses that depend on the deposit amount. Traders get a 20%, 25%, 30%, or 35% bonus when they deposit upwards of $50, $100, $150, or $300, respectively. Utilizing this offer can boost trading capital.
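The bonus tiers above can be expressed as a small lookup. This is only an illustrative sketch: the thresholds and rates come from the paragraph above, while the function names and the assumption that a tier applies as soon as the deposit reaches its threshold are ours, not the broker's published rules.

```python
# Deposit thresholds (USD) and bonus rates quoted in the text above,
# ordered from the highest tier down.
# Assumption: a tier applies once the deposit reaches its threshold.
BONUS_TIERS = [(300, 0.35), (150, 0.30), (100, 0.25), (50, 0.20)]


def bonus_rate(deposit: float) -> float:
    """Return the bonus rate for a deposit, or 0.0 below the lowest tier."""
    for threshold, rate in BONUS_TIERS:
        if deposit >= threshold:
            return rate
    return 0.0


def trading_capital(deposit: float) -> float:
    """Deposit plus bonus, under the tier assumption above."""
    return deposit + deposit * bonus_rate(deposit)


for amount in (40, 50, 120, 300):
    print(f"deposit ${amount}: bonus rate {bonus_rate(amount):.0%}, "
          f"capital ${trading_capital(amount):.2f}")
```

Bonus funds usually come with conditions attached, so check the broker's current terms before relying on these figures.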

The offer above is one of the many promotions the broker offers to traders. You can expect perks like reduced risk and cashback regularly. In addition, active traders are awarded promo codes that they can use for discounts across the firm.


The trading experience

Its trading platform boasts a feature-loaded interface that is suitable for professional traders. You can use the platform from your browser – no downloads are required.

One of the best things about the interface is that features are organized for convenient access. You will never have trouble finding an option, which makes quick trading much less of a hassle.

Additionally, the diverse set of expiry durations, ranging from one minute to months, makes it suitable for traders of any trading style.

With over 410 assets for digital options, 27 forex assets, 15 indices, and several cryptocurrencies and commodities, Quotex.io offers the largest asset variety of all brokers.


Mobile app review

Quotex.io leaves a huge chunk of the market untouched since it only offers an app on the Android platform. Nonetheless, traders can access the web version of the platform from the web browser on any phone.

This allows Quotex.io users to execute and track their trades from anywhere, at any time.

The platform comes with an integrated signal provider that boasts an accuracy rate of 87%. As a result, you can use the signals to create strategies and make money in markets with which you don’t have much experience.

One of the best things about Quotex.io’s platform is the highly responsive chart. You never have to worry about losing out on trading opportunities due to a slow platform.

The ordering screens are available on the right side of the chart, which makes placing orders and tracking trades easy. Furthermore, the chart is highly customizable, ensuring that every trader gets the convenience they want out of the platform.

The platform also offers several trading indicators, each of which can be personalized and fine-tuned for optimal use.

Small conveniences like getting access to market news on your live account make the Quotex.io mobile app a viable option for many traders.

Pros

- No commissions

- Copy trading features

- Weekend trading

- Zero withdrawal fees on Quotex

- Free Quotex demo account

Cons

- Broker does not offer leverage

- Limited Quotex payment methods in some countries


4. Deriv app – Supports multiple devices and automated trading

If you are looking for a broker that offers not only a good app but also numerous trading platforms, then Deriv is the right choice for you. The big advantage of this broker is that it offers extremely high payouts of up to 90%! This is a massive return, especially when compared to many other binary options brokers that have payouts of 60-70%. This means, in simple words, more profit for you!
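To see what a higher payout rate means in practice, here is a minimal sketch comparing the payout levels mentioned above. It assumes the common binary options structure in which a winning trade returns the stake plus `stake * payout_rate` and a losing trade forfeits the whole stake. The function names are illustrative, and real contracts may differ.

```python
def net_result(stake: float, payout_rate: float, won: bool) -> float:
    """Profit or loss on a single trade.

    Assumption: a win pays stake * payout_rate on top of the returned
    stake, and a loss forfeits the whole stake.
    """
    return stake * payout_rate if won else -stake


def break_even_win_rate(payout_rate: float) -> float:
    """Share of trades that must win for the expected result to be zero.

    Solves p * payout_rate - (1 - p) = 0 for p.
    """
    return 1.0 / (1.0 + payout_rate)


for rate in (0.60, 0.70, 0.90):
    print(f"payout {rate:.0%}: a $100 win earns ${net_result(100, rate, True):.2f}, "
          f"break-even win rate {break_even_win_rate(rate):.1%}")
```

Under these assumptions, a 90% payout still requires winning a little more than half of all trades to break even, while a 60% payout requires winning 62.5% of them.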

Furthermore, Deriv’s security standards are excellent. The broker is regulated, which makes for a safer trading experience and reduces the risk of fraudulent activity. Deriv is a reputable broker, not a scam, and is trusted by many traders around the world. Your Deriv deposits are protected: the site is SSL encrypted, and there is negative balance protection.


Trading experience

With automated trading, you can make use of accurate algorithms. This broker allows you to trade binary options from the comfort of your mobile phone, having a strong robot on your side that gives you recommendations and predictions. Simply pull out your mobile phone, follow the latest market developments and spot opportunities as they arise. You can also set up signals on your mobile phone for a superior trading experience.

Trading on Deriv is available 24/7, so you can trade whenever you want, day or night.

Besides, there is a free Deriv demo account. You can practice trading without having to invest a single penny. That’s what we call a modern broker!

Mobile app review

With only around 100 tradable assets, there are comparatively few instruments to trade on the Deriv app. But we can tell you from experience that the most important assets are available, including Forex, OTC assets, commodities, and stocks. You may find what you need here.

Overall, the Deriv app is easy to use and highly recommended. There are no technical difficulties or server outages. You can expect a good trading experience without having to worry about lags or inaccurate data. Interestingly, the reviews of the Deriv app say the same. They are comparatively positive.

All of these points indicate that you cannot go wrong with Deriv and can make a solid profit. Happy binary trading!

Pros

- Trusted by many traders around the world

- Highly secure

- Numerous assets available

- They have various platforms to choose from

- The app is user-friendly

- No hidden fees

Cons

- Not available in every country

- The leverage is not the highest


5. Olymp Trade app – Good education and different account types

Smart traders choose Olymp Trade. It has one of the best educational programs. This platform is suitable for those who want to take binary options trading seriously and learn all the different strategies available. It is for those who are dedicated and want to keep the money coming into their bank account.

Olymp Trade will teach you everything you need to know about professional binary trading, from different pattern formations to complex strategies that only a few people in the world use. This knowledge will give you real insight into market movements. It allows you to make logical predictions.

Olymp Trade has established itself as one of the leading binary options brokerages in the industry. It serves traders in several countries, including Brazil, Turkey, and India, and is also known as the best binary app in India.

In fact, Olymp Trade is one of the most regulated brokerages. It is regulated by the International Financial Commission, which is known for its excellent security standards.

Strict regulation, independent certification, and regular third-party audits demonstrate the broker’s commitment to quality service. Besides, the yields are high! That’s what we call a “profit machine”!


The trading experience

At Olymp Trade, you can expect a superb trading experience. There are no hidden costs, payouts of up to 92% are possible, and you can open a free demo account.

The high security standards also speak for themselves. Compensation of up to $20,000 is available for unforeseen events if the platform is at fault, so you are covered if the platform makes a mistake. There are different types of accounts, all of which can be tailored to your individual needs.

The proprietary trading platform uses the SSL protocol to ensure that trader data is always encrypted. As a result, your bank information, personal data, and funds remain safe during Olymp Trade withdrawals and Olymp Trade deposits.

Unfortunately, the platform does not offer a wide range of trade types. You can only make standard call/put trades and short turbo trades on the platform. While these trade types make it easy for traders to make trading decisions, experienced traders may find that the lack of trade types limits their trading.

However, they offer non-stop trading, a feature not offered by many brokers. You can trade at night or on weekends without restriction, making Olymp Trade an excellent option for casual and professional traders from all over the world.

Mobile app review

The Olymp Trade trading app is available for download on both Android and iOS phones. It is one of the most impressive binary options trading apps, offering numerous charting tools.

You can set the application to notify you of market trends and order updates. The app comes with economic calendars, and you can use independent tabs with these charts. You will also receive promotional information and transaction details as push notifications on your mobile phone.

Keep in mind that the app does not have all the features of the browser version of the platform. So if you’re looking for an in-depth analysis of markets and positions on the move, the Olymp Trade app is not for you.

However, you will find all the essential features you need in the app. There are many scalable features on the platform and the app allows you to trade with one click without confirmation.

The app also features a chart with a fast refresh rate, allowing you to take advantage of profit opportunities as they arise.

The theme of the mobile apps is designed to match the browser platform. This makes the transition from desktop to mobile a seamless experience.

So if you’re looking for an easy-to-use app that doesn’t complicate binary options trading, the Olymp Trade binary options app is for you.

Pros

- Weekend trading available

- High payout

- Tournaments with cash prizes

- Robust Olymp Trade demo account

Cons

- No social trading features

- Broker does not offer trading signals

- Not available for traders in the US and EEA


6. Exnova app – New binary trading app with high return

A relatively new broker that is shaking up the market is Exnova. This binary app has it all. The barrier to entry is very low, as you only need to make a small Exnova deposit of ten dollars to start trading binary options with real money. Both beginners and professional traders can sign up with Exnova for free and open a demo account.

At Exnova you get a top-notch mobile app that allows you to trade on the go: on the bus, on the train, at school, or during your lunch break. The app lets you follow what’s going on in the markets from almost anywhere. Good opportunities arise spontaneously from time to time, and with this broker, you can take advantage of them. The trading experience on the Exnova app is just as good as on the web version.


The trading experience

Exnova provides you with helpful trading courses where you can practice binary trading like a real pro. You also have access to in-depth video tutorials and strategies that you can watch online. As you can see, they care about the success of their community.

When it comes to charting, Exnova offers you endless possibilities. There are many analysis options and different chart types available, including the popular Candlestick and Heikin-Ashi charts.

Their binary options trading application is available to you after a successful Exnova verification and allows you to choose from a variety of trading instruments. Forex, commodities, stocks, indices, or OTC assets: Exnova has you covered. They provide their traders with all they need to make big profits.

As binary options trades are usually executed rapidly, it is even more convenient to be able to execute these trades on the go. Simply pull out your mobile phone and quickly open a binary options trade. Exnova gives you the ability to do this without having to sit in front of your computer all day. Download the Exnova app and try it out!


Mobile app review

You can access the Exnova download page on their website. Here, you can select the Exnova app.

The best thing about the Exnova app is that it is effortless to use. Its structure is intuitive, even for beginners. There is nothing to confuse you. Instead, you get all the important metrics at a glance, so you can immediately choose to open the trade of your choice. However, for those who like to be very precise, there are many analysis tools and trading indicators available.

Most importantly, the Exnova app is entirely free. There are no additional fees compared to the web version. This makes it one of our absolute favorites when it comes to trading binary options on the go.

Pros

- Great for beginners

- High payout

- No additional fees

- Free demo account

- Secure binary options app

Cons

- Not accessible in every country

- Not all indicators are available


7. Expert Option app – Good option for short-term trading

Do you like short-term trading? Then this application is just right for you. Expert Option was founded in 2014 and over the years, the broker has garnered a massive user base of 13 million traders globally. The brokerage has over 100 account managers for handling clients and offers over 100 tradable assets.

It is perfect for those who like to make quick profits without having to worry about the big picture. Traders can trade binaries on currencies, stocks, commodities, and cryptocurrencies on the platform. The VFSC regulates the brokerage because the company is based in Port Vila, Vanuatu.

The trading experience

The broker offers several features that set it apart from other well-known brokerages. Its excellent social trading features allow traders to connect with friends and follow the top traders on the platform. You can see which assets another trader is trading, on which markets, and how much profit they’re making.

The several technical analysis tools, four chart types, eight indicators, and several trend lines enable you to make sensible trading decisions.

The daily market analysis will keep you in the loop about every market’s movement.

If you’re new to trading binaries, Expert Option offers a plethora of educational resources that you can use to learn to trade.

Another impressive feature of the platform is the trading signals it offers. Traders that sign up with Expert Option receive a steady supply of trading signals that can help them turn a quick profit.

Expert Option indicates the strength of the signal it supplies. You will receive “Strong Buy,” “Buy,” “Neutral,” “Sell,” and “Strong Sell” alerts that you can utilize to make profits.


Mobile app review

Expert Option makes it easy for you to trade online with the web browser version of the platform. You do not have to download any software to trade.

However, the trading platform is also accessible via desktop and mobile apps. You can install the Expert Option trading app on Android and iOS phones as well as on Mac and Windows computers.

The proprietary platform provides near-instantaneous execution of trades and offers accurate price feeds. These are only a few of the many advantages that come with a proprietary platform.

The biggest focus of the interface across devices is the graph in the center. However, you have the flexibility of customizing the chart layout as you see fit. One of the many ways you can customize the layout is by splitting the chart to show two markets simultaneously.

The mobile apps feature the same indicators, chart types, and other trading tools as the desktop and browser versions of the platform. So if you want a binary options trading app that offers a no-compromise trading experience, the Expert Option app will not fail to impress you.

Pros

- Up to 90% payout

- Free Expert Option demo account

- Hundreds of assets

- Available in 15 languages

- Short-term trading

Cons

- Not available in the US and EEA

- Complicated first-time Expert Option withdrawals


How to pick the best binary options mobile app

There are some significant differences between the apps of the top seven brokerages in the industry. These differences can make it challenging to find a trading app that meets all your trading needs.

Learning about the factors that can make a difference in your trading experience can help you make a clear decision and find the right app.

Here is a list of things you must consider before choosing a binary options trading app:

#1 Compatibility

By convention, binary options brokerages enable traders to use their platform via their web browsers. While there are brokers that have apps for mobile phones, you must ensure that the application is available on your phone’s OS.

Some brokers offer apps on only the Google Play Store. Other brokers only have an app for traders using iPhones.

The majority of brokerages that offer mobile apps offer them on both operating systems. That said, doing your due diligence and ensuring app availability is crucial before signing up.

#2 Security

Having funds in a trading account that you’re not sure is secure can take away your peace of mind. On the other hand, if you know that your trading app is secure, you won’t have to think twice when trading from your phone.

One of the best ways to ensure that your funds are safe is to sign up with a broker that is a member of the SIPC. The US non-profit organization protects investor funds, compensating customers if a member brokerage fails financially.

Besides checking for a SIPC membership, look for two-factor authentication and encryption algorithms. These are some effective security measures that have become commonplace in the industry.


#3 User-friendliness

The best trading conditions are of little use if you do not know how to use the platform. Therefore, pay special attention to usability.

Trading binary options can be stressful. Seconds can mean the difference between success and failure. Hence, the app should not be designed to increase your stress level but to give you maximum comfort. You need to keep a cool head to make big profits, no matter how fast you need to react.

Therefore, we recommend choosing a binary options app that you like and feel good trading on. If you are serious about trading binary options, you will spend a lot of time in the app looking for suitable opportunities. Make sure you like the interface, and customize it if necessary. Overall, easy navigation and a variety of analysis options contribute to good user-friendliness.

#4 Execution speed

The execution speed for trades is crucial for successful trading. Often, the execution speed is slower when using a mobile app for trading than when using a desktop PC. Before you deposit real money, you should check out the execution speed via the demo account. After that, we recommend starting with small amounts to check the execution speed in live trading.
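One way to compare execution speed in a demo account is to time a batch of orders and look at the median and the worst case. The sketch below is only an illustration: `place_demo_order` is a hypothetical stand-in that simulates a delay, since none of the brokers' real interfaces is shown here. Replace it with whatever call your platform actually exposes.

```python
import statistics
import time
from typing import Callable, List


def measure_latency_ms(action: Callable[[], object], runs: int = 20) -> List[float]:
    """Time `action` repeatedly and return each duration in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        action()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def place_demo_order() -> None:
    """Hypothetical stand-in for a demo-account order; not a real broker API."""
    time.sleep(0.005)  # simulates a round trip of roughly 5 ms


samples = measure_latency_ms(place_demo_order, runs=10)
print(f"median {statistics.median(samples):.2f} ms, worst {max(samples):.2f} ms")
```

Looking at the worst case as well as the median matters here, because a single slow execution can decide a short-expiry trade.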

#5 Low minimum deposit

Some binary options apps seem to offer good conditions, but it turns out that the minimum deposit is very high. Especially as a beginner, you should not be put off by this. There are plenty of binary options apps that offer minimum deposits as low as ten US dollars. It is much better to start with a low minimum deposit as this will allow you to practice trading. Once you feel confident, you should be free to decide whether you want to invest more money or not.

Make sure you choose the right trading platform with a low minimum deposit. This is often a sign of a reputable broker. Reputable brokers give you the freedom to choose how much you want to deposit.


#6 Available Assets

Access to a high number of assets gives you more opportunities to make winning trades. Different brokers offer a different number of assets, and some brokers only offer assets from specific markets. Having access to more markets allows you to diversify your portfolio and manage risk.

In addition to ensuring that the broker offers assets in the markets you usually trade in, you must also ensure the broker offers the trade types you use.

Every broker offers call and put options. However, you may be interested in using other trade types such as one- and two-touch options.

#7 Demo Account

One of the biggest selling points of mobile trading applications is the availability of a demo account.

If your trading app has a demo account, you will be able to test out and practice trading strategies when you’re on the move. Trading in the live market will give you the exposure you need to make more money.

With a demo account, you will be able to trade in live markets without having to spend a dollar. Most brokers give you thousands of dollars in dummy money to partake in live markets.

Using a mobile application that makes it easy to switch between real and demo accounts can help make quick profits.

#8 Notifications

Another big advantage of mobile applications is that the best ones send you notifications to alert you of changes in market conditions and the execution of trades.

Using a mobile app that gives you extensive control over alerts can enable you to make profitable trades throughout the day.


Trade execution speed compared

From our experience, trade execution on binary trading apps is much slower than on desktop trading. Even so, execution speed is an important factor to consider when picking a mobile app. We tested and compared the execution process of each binary app in our comparison:

| App: | Execution speed: |
|---|---|
| Pocket Option | 0.42 ms |
| IQ Option | 0.45 ms |
| Quotex | 0.41 ms |
| Deriv | 1.11 ms |
| Olymp Trade | 1.32 ms |
| Exnova | 0.98 ms |
| Expert Option | 0.72 ms |
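
To make the table easier to read at a glance, the measurements can be sorted from fastest to slowest. The following is only a small sketch: the numbers are copied from the table above, and the variable names (`execution_ms`, `ranking`) are our own choices for illustration.

```python
# Execution times in milliseconds, copied from the table above.
execution_ms = {
    "Pocket Option": 0.42,
    "IQ Option": 0.45,
    "Quotex": 0.41,
    "Deriv": 1.11,
    "Olymp Trade": 1.32,
    "Exnova": 0.98,
    "Expert Option": 0.72,
}

# Sort from fastest (lowest time) to slowest (highest time).
ranking = sorted(execution_ms.items(), key=lambda item: item[1])

fastest_app, fastest_ms = ranking[0]
slowest_app, slowest_ms = ranking[-1]

for position, (app, ms) in enumerate(ranking, start=1):
    print(f"{position}. {app}: {ms:.2f} ms")

print(f"Fastest: {fastest_app}, slowest: {slowest_app}")
```

Sorted this way, Quotex, Pocket Option, and IQ Option form the fastest group, while Deriv and Olymp Trade are the slowest in our test.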

Pros and cons of using Binary trading apps

Let’s face it: trading binary options from an app can be very appealing, especially when you consider that 20 years ago it was not possible to take out your smartphone and trade. Digitalization made this step possible, so these apps offer a very modern and empowering way to make profits without being restricted.

Many digital nomads have already taken advantage of these opportunities and made millions of US dollars using their mobile phones. Trading binary options can certainly be a way to make money if you make the right predictions and are consistent.

But it takes more than just luck. You have to make the right decisions, and analysis tools can help you better understand what is happening in the market. A big disadvantage of trading binary options with an app is that you cannot view several analysis tools as clearly as you can on a PC. You are also often distracted by notifications. Therefore, you need to ask yourself whether PC trading or mobile trading is better for you.

Here we have summarized some of the pros and cons of trading binary options with an app:

Pros:

- Trade on the go

- No local restrictions

- You literally have your success in the palm of your hand

- Less distraction than on the computer

- High profits possible

- You can set signals

- You can react to market signals from everywhere

- High flexibility

- Easy to use

- Uncluttered

Cons:

- Small screen

- Many analysis tools take a long time to open

- You cannot open several charts at the same time

- Traders are often less committed on a mobile phone than with a professional PC setup

- Many distractions from notifications

We recommend having a good app for trading binary options. It can be a valuable addition to trading on the PC.

Binary trading app download – Step-by-step tutorial

Once you understand how it works, downloading a binary options app is not difficult. We have briefly summarized all the steps you need to take.

Step 1 – Register with a broker

The first step is to register with a binary options broker. You can use any broker of your choice. In this example, we have used Pocket Option. Just visit their website and open an account. Once you have done so, continue with the second step.

Step 2 – Log in to the broker

The second step is to log in. Sometimes you have to go through a verification process, but this depends on your broker. Once you have successfully completed this step, you will have an account that you can use to log in to the application.

Step 3 – Go to the download page and select the app

The third step is to go to the platform’s download page and download the app to your phone. Once the app is installed, you only need to log in with your newly created account to make a deposit. As most brokers offer free demo accounts, you can also start with a practice account.

Step 4 – Start trading!

Now log in with the account details you created in Step 1 and start trading. Be sure to check out the best binary options strategies before making your first trade. On this website, we provide you with the best binary options tools and helpful insights. Good luck with your first trades.

Are Binary Options apps secure?

Yes, Binary Options apps are secure when you download them from the official App Store (iOS) or Play Store (Android), where they are checked for security by Apple and Google. If you use these official sources, the chance of getting a virus on your mobile phone is near zero.

However, you need to be careful with third-party downloads. We do not recommend downloading a binary trading app from unknown websites because it can be infected with malware, and there is a high chance that your data will be stolen by criminals. Binary trading apps are safe, but only if you download them from official sources.

Which assets can you trade on a Binary Options app?

You can always trade the same assets that are offered on the desktop versions. The broker of your choice limits the asset selection. The most common assets are:

- Cryptocurrencies

- Currencies (Forex)

- Indices

- Stocks

- ETFs

- Commodities

Most binary apps offer around 100 assets to trade, and some offer up to 300.

Alternatives to binary trading apps:

Binary Options trading apps are not available in every country, so sometimes alternatives are needed. The best alternative to Binary Options trading is to trade Forex, CFDs, or stocks. The following two brokers are the best alternatives:

| | Pocket Option | RoboForex |
|---|---|---|
| Platforms: | MetaTrader 4, MetaTrader 5 (for Forex) | MetaTrader 4, MetaTrader 5 (for Forex, CFDs, Stocks) |
| Bonuses: | Yes | Yes |
| Minimum deposit: | $50 | $10 |
| Demo account: | Yes, unlimited | Yes, unlimited |
| Leverage: | Up to 1:500 | Up to 1:2000 |
| Special: | Instant deposit | Cent accounts and ECN trading |
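
To make the leverage row more concrete, here is a minimal sketch of the arithmetic. It assumes the full minimum deposit is used as margin and that the maximum leverage from the table applies to that account; in practice, the usable leverage depends on the broker, the asset, and the account type. The function name `max_position_size` is our own and not part of any broker's software.

```python
def max_position_size(deposit_usd: float, leverage_ratio: int) -> float:
    """Largest position a deposit can control at a given leverage.

    A leverage of 1:500 is passed as leverage_ratio=500.
    Assumes the whole deposit is used as margin (illustrative only).
    """
    return deposit_usd * leverage_ratio


# Minimum deposit and maximum leverage, copied from the table above.
pocket_option_max = max_position_size(50, 500)
roboforex_max = max_position_size(10, 2000)

print(f"Pocket Option: up to ${pocket_option_max:,.0f}")
print(f"RoboForex: up to ${roboforex_max:,.0f}")
```

Under these assumptions, the $50 minimum deposit at 1:500 controls a position of up to $25,000, and the $10 minimum deposit at 1:2000 controls a position of up to $20,000.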

Conclusion: Pocket Option offers the best Binary trading app

Making money trading binary options on the move does not need to be a pipe dream. Now that you’re acquainted with the best binary options trading apps, you can use our guide and comparison to find the right app quickly.

While every app on our list offers a unique set of perks, the Pocket Option app has the most balanced feature set of them all. With 100+ assets offered, social trading, high returns, and app availability on all platforms, it stands out as the go-to app for traders of all skill levels.

If you want to trade binaries on a broader range of assets, you can consider opening an account with Pocket Option, which offers 130+ assets to trade.

It is essential that you bear your needs and preferences in mind when picking a binary options trading app. What’s right for one trader isn’t always right for another.

All that’s left to do is download the app you like the most and sign up, so you can get started with trading binary options.

In conclusion, these are our favorite binary apps, in ranked order:

- Pocket Option – The best app for any device with the highest return

- IQ Option – User-friendly and the minimum deposit starts at only $10

- Quotex – Free signals via the app

- Deriv – Supports multiple devices and automated trading

- Olymp Trade – Good education and different account types

- Exnova – New binary trading app with high return

- Expert Option – Good option for short-term trading

(Risk warning: Your capital can be at risk)

Most asked questions about Binary Options apps:

Which app is the best for binary trading?

There is no single “best binary options app”. Which trading app is best ultimately depends on your needs and the device you have, so our recommendation is to look at different ones and find the one that suits you best. Please note that not all trading apps are equally available on Android and iOS, so the choice might differ from person to person. We recommend the Pocket Option app as it is particularly easy to use, offers good trading conditions, is available for both iOS and Android, and makes a solid overall impression.

Are binary options apps legal or not?

Binary options trading apps are legal in many countries, but it depends on which country you are in. In Europe, it is generally difficult to trade binary options, as strict rules apply there. If you live in other countries, you can use these apps to make profits without any problems. Some websites claim that trading binary options via an app works with a VPN. Whether and how well this works is something you have to test yourself. We recommend the Pocket Option app.

How to download binary trading apps?

Downloading an app to trade binary options is easier than you think. Click on the broker, go to the download section, and download the app for your smartphone. On this site, we have provided you with a step-by-step tutorial. Many trading apps are also available from the Google Play Store or the Apple App Store. In this article, we have highlighted the best apps that are easy to download.

Can I test binary options apps for free?

Yes, you can test binary options apps for free. While many brokers offering binary options trading are dubious and only offer paid versions, there are some that offer trading for free. Our favorite is Pocket Option. You can trade on a demo account for as long as you like with no risk. There is no obligation to deposit real money, which means you can trade and practice completely free of charge.